Ethical Implications of AI in Social Media Law Enforcement

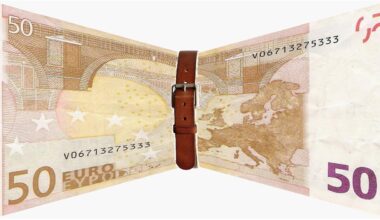

The advent of artificial intelligence (AI) in social media law enforcement has raised numerous ethical concerns, particularly regarding privacy, bias, and accountability. These issues grow more pressing as law enforcement agencies increasingly rely on AI algorithms to analyze user-generated content. The potential for privacy invasion is significant when AI sifts through personal messages and posts to identify threats or criminal activities. Furthermore, bias in algorithms can lead to disproportionate targeting of specific groups, raising questions about the fairness and justice of such practices. Reports have highlighted instances where AI systems used by social media platforms inadvertently reflect societal biases, leading to concerns about discrimination and profiling. As technology progresses, it is essential to ensure that AI applications are developed and deployed responsibly and in keeping with ethical standards. Accountability is another critical aspect of this discussion: mechanisms must be in place to address potential harms caused by AI decision-making. Policymakers, companies, and society must work together to establish ethical guidelines governing the use of AI in law enforcement to prevent misuse and protect civil liberties. Only then can the integration of AI serve the public good without compromising ethical standards.

As AI becomes increasingly integrated into social media law enforcement, the balance between security and civil liberties comes into sharp focus. Law enforcement agencies leverage AI to monitor online behavior, identify potential threats, and even predict criminal activity based on patterns. While these capabilities aim to enhance public safety, they also risk infringing on individual rights. For example, the use of surveillance technology, such as facial recognition systems, raises significant concerns regarding consent and the right to privacy. Many citizens may not be aware that their online activities are subject to scrutiny, and they often lack clarity on how their data is used. Additionally, there is an ongoing debate about the ethical implications of predictive policing facilitated by AI, which could unfairly target specific communities and lead to over-policing. Extensive surveillance and monitoring can also create a chilling effect on free expression, as users may self-censor for fear of repercussions. Thus, it is critical for stakeholders to engage in a comprehensive dialogue on establishing clear boundaries for the surveillance capabilities of AI. Transparency and public awareness can foster a better understanding of where the line between safety and personal freedom lies in this technology-driven landscape.

Addressing Bias in AI Algorithms

The presence of algorithmic bias in AI systems represents a significant ethical concern in social media law enforcement. Bias can arise during various phases, including data collection, algorithm design, and implementation. For instance, if the data used to train AI models predominantly features information from specific demographics, the result will likely perpetuate existing stereotypes and inequalities. There are cases where social media platforms have faced backlash due to biased AI algorithms that disproportionately target marginalized communities. Several organizations have proposed frameworks to mitigate these biases, emphasizing the importance of diverse datasets, regular audits, and inclusive practices. Ensuring that a broad range of perspectives is considered during the development of AI systems helps to identify and correct any biases early in the process. Furthermore, involving ethicists, technologists, and community representatives can create a more equitable technological landscape. Ethical AI is not just about performance; it also encompasses fairness. All stakeholders must collaborate to establish standards and protocols governing AI use in law enforcement to prevent discrimination and enhance accountability.
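The regular audits mentioned above can take many forms. As one minimal sketch, an auditor might compare how often a content-flagging model flags items from different demographic groups. Everything here is an assumption made for illustration: the function names `flag_rates` and `disparity_ratio`, the group labels, the synthetic data, and the review threshold of 1.25 are hypothetical and do not come from any specific framework or platform.

```python
from collections import defaultdict


def flag_rates(records):
    """Return the share of flagged items per group.

    `records` is an iterable of (group, flagged) pairs, where `flagged`
    is True when the (hypothetical) model flagged the item.
    """
    totals = defaultdict(int)
    flagged_counts = defaultdict(int)
    for group, flagged in records:
        totals[group] += 1
        if flagged:
            flagged_counts[group] += 1
    return {group: flagged_counts[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the highest to the lowest group flag rate (1.0 means parity)."""
    lowest = min(rates.values())
    highest = max(rates.values())
    if lowest == 0:
        # No group is flagged at all: parity. Otherwise the ratio is unbounded.
        return 1.0 if highest == 0 else float("inf")
    return highest / lowest


# Synthetic, illustrative data only: 100 items per group.
sample = (
    [("A", True)] * 25 + [("A", False)] * 75
    + [("B", True)] * 50 + [("B", False)] * 50
)

rates = flag_rates(sample)
ratio = disparity_ratio(rates)

# Assumed threshold; a real audit would justify this value explicitly.
REVIEW_THRESHOLD = 1.25
needs_review = ratio > REVIEW_THRESHOLD
print(rates, ratio, needs_review)
```

A gap in flag rates is only one signal and does not by itself establish unfair treatment, since groups may differ in the content they post; an audit of this kind is better read as a trigger for closer human review than as a verdict.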

The ethical implications of AI in social media law enforcement extend to the need for transparency and accountability in algorithms and their application. Citizens have a right to know how their data is used and the criteria on which decisions affecting them are made. This transparency can increase trust between law enforcement and the communities it serves, and it gives individuals a means to challenge decisions made by AI. Furthermore, accountability must be addressed through clear guidelines that define roles and responsibilities among developers, users, and law enforcement agencies. Without proper accountability mechanisms, it becomes difficult to address abuse or errors resulting from AI systems. A collaborative approach involving policymakers, technologists, and civil society representatives is needed to create and adapt regulatory frameworks that govern the use of AI in law enforcement. Educating the public about their rights regarding AI usage and data privacy can empower individuals to seek clarification or file complaints when necessary. Ultimately, a well-informed society can drive demand for ethical practices in AI-related law enforcement activities.

Global Perspectives on AI Ethics in Law Enforcement

Many countries around the world are grappling with the ethical implications of AI in law enforcement, leading to varied responses across jurisdictions. In some regions, strict regulations have been implemented to curtail the misuse of AI tools, while others have embraced the technology with few restrictions. For instance, in the European Union, data protection regulations such as the General Data Protection Regulation (GDPR) emphasize individual rights and impose stringent conditions on data processing. These protective measures highlight the need for accountability and transparency in AI, especially in law enforcement applications. By comparison, in the United States, discussions surrounding the regulation of AI in this context remain fragmented, with some jurisdictions pushing for comprehensive laws while others lack clear guidelines. Despite these differing approaches, there is a growing movement advocating for ethical AI practices globally. Additionally, collaborative efforts and knowledge sharing among countries can catalyze the development of international standards for AI applications in law enforcement. Establishing such norms and standards is essential to protect civil liberties while leveraging technology to enhance public safety, and it reflects the need for a more unified approach to AI ethics.

The intersection of social media, AI, and law enforcement will likely continue to evolve, necessitating ongoing ethical scrutiny. As AI applications permeate more aspects of law enforcement, practitioners will need to remain vigilant about the ethical implications of their work. Continuous education regarding evolving ethical standards and legal frameworks is vital for law enforcement officials and technology developers alike. Additionally, as social media platforms refine their AI capabilities, they should prioritize ethical oversight and community engagement to foster healthier interactions between technology and society. Constructive dialogue involving diverse stakeholders can lead to sustainable solutions that uphold human rights while maximizing safety. This also requires a commitment to responsible development practices that prevent harm. Building public trust is fundamental to effective law enforcement. Ultimately, as technology advances, the imperative to balance ethical considerations with the potential benefits of AI in social media law enforcement cannot be overstated. Community participation, ethical training, and transparency can lead to innovative uses of technology that enhance safety without sacrificing ethical responsibility.

Conclusion: The Path Ahead

Looking forward, navigating the ethical implications of AI in social media law enforcement will require concerted efforts among technologists, policymakers, and the public. Continuous dialogue on best practices and evolving ethical standards is crucial to ensure that AI serves as a tool for justice rather than a source of harm. Establishing clear guidelines and accountability mechanisms will be essential to address the concerns surrounding privacy, bias, and discrimination. Public engagement and advocacy will play important roles in shaping the approaches to AI in law enforcement, creating a more equitable landscape. Furthermore, fostering a culture of ethical innovation among developers can help mitigate risks while enhancing capabilities. To prevent misuse of AI technologies, societies must emphasize their shared values of fairness and justice. Legal reforms may also be necessary to keep pace with rapid technological advancement. Such reforms should incorporate stakeholders’ views and experiences, recognizing the multiplicity of voices within communities affected by law enforcement practices. Thus, a collaborative approach, grounded in ethical considerations, will pave the way for employing AI responsibly while safeguarding fundamental rights and liberties in our increasingly interconnected world.

In summary, the ethical implications of AI in social media law enforcement require a multidisciplinary approach that prioritizes human rights. As various stakeholders engage in this critical discourse, it is essential to balance technological advancements with ethical principles. Policymakers must strive for regulations that not only advance public safety but also protect individual rights and freedoms. The global conversation surrounding AI ethics compels a re-evaluation of existing practices within law enforcement, underscoring the need for transparency, accountability, and non-discrimination. In addition, continuous education and stakeholder involvement will play a pivotal role in forming the ethical standards that inform AI development. Engaging communities in these discussions can build trust and enhance collaboration, ultimately benefiting society as a whole. Social media platforms bear immense responsibility for how they implement AI in the face of these ethical dilemmas, so they must address them proactively rather than after harm has occurred. Future innovations in AI must consider the ethical dimensions that accompany them, ensuring they contribute positively to society. As the landscape evolves, so too should our commitment to safeguarding civil rights, advocating for fairness, and using technology as an instrument of progress toward safer communities.