How Algorithmic Promotion of Extreme Content Impacts Mental Health

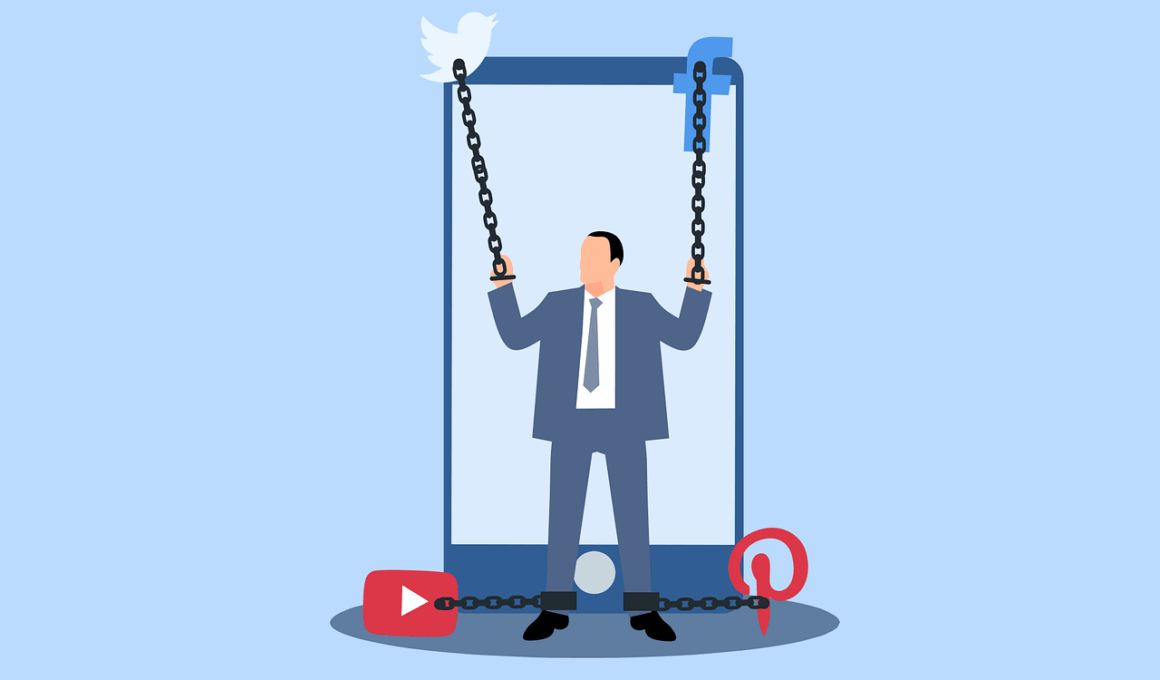

Social media platforms use algorithms to decide which content is promoted to users. Because these systems are often driven by engagement, they tend to amplify extreme, sensational, or polarizing content. Such promotion can have profound implications for the mental health of users, particularly vulnerable individuals. The echo chamber effect created by these algorithms increases exposure to negative viewpoints, which can reinforce harmful beliefs and provoke anxiety. Prolonged exposure may contribute to more serious mental health problems, as users often feel isolated in their beliefs. Research suggests that users who engage with extreme content are more likely to experience depression and other mental health disorders. As users scroll through their feeds, they may unknowingly feed a cycle that promotes hatred, prejudice, and intolerance. Understanding the role of algorithms in shaping user experience is crucial for addressing these issues. To mitigate these negative effects, users and platform developers should consider the ethical dimensions of algorithmic promotion. Increased awareness can guide better design choices, ultimately fostering a healthier online space.

Isolation and Loneliness

One result of algorithmically driven extreme content is increased isolation and loneliness. Users may feel disconnected from friends and family when their feeds mainly reflect extreme opinions. This isolation can push users towards more extreme thoughts, creating a feedback loop that is detrimental to mental well-being. Users might also come to feel that their perspectives are marginalized or shared by no one around them, fostering a sense of alienation. As their online interactions become less human and more combative, mental health issues such as anxiety can become more common. Loneliness is not just an emotional state; it can lead to serious health issues, including chronic stress and depression. These effects highlight the need for features that promote positive interactions, and social media platforms must consider them when designing algorithms. Encouraging diverse viewpoints could alleviate feelings of isolation, and platforms can foster connections among users that enable more supportive online experiences. Users should also take proactive steps by curating their feeds so that they are exposed to a variety of viewpoints rather than echo chambers of extreme content. This shift could have a positive influence on mental health.

Another way that algorithmic promotion affects mental health is through the normalization of distressing content. When users frequently interact with extreme or distressing material, they can become acclimated to it and desensitized to violence or suffering. This desensitization may reduce empathy and compassion, making it harder to relate to the emotional struggles of others. Over time, individuals may lose sight of healthier emotional responses, contributing to a societal shift towards apathy. Young users are particularly susceptible, as they are often still developing their emotional frameworks. The implications are concerning, because compassion and empathy are crucial for building supportive communities. When users primarily consume content that normalizes negativity, they contribute to a culture that sees mental distress as a typical state. It is essential for social media platforms to recognize the influence of the content they promote. Creating systems that discourage extreme content while rewarding positive interactions can reshape user experiences. Users also have a role to play by choosing platforms and content that prioritize mental well-being. This shift could lead to healthier interactions.

Impact on Self-Image

Algorithmically promoted extreme content can significantly skew users’ self-image and body perceptions. Platforms often promote unrealistic depictions of beauty and success, leaving users to grapple with feelings of inadequacy. Regular exposure to curated feeds can foster negative comparisons and diminished self-esteem. Users may begin to believe that their lives do not measure up to these distorted images, which breeds dissatisfaction with their own existence. When algorithms prioritize likes and shares over authentic content, the resulting environment becomes toxic for many young people. Issues related to body image, eating disorders, and other mental health concerns have been exacerbated by social media influences. The disparity between the idealized lives presented online and the mundane reality many experience fosters anxiety and self-doubt. This presents a clear need for platforms to consider their role in shaping perceptions of self-worth. Balancing engagement-driven algorithms with ethical considerations could help create healthier user experiences. Furthermore, media literacy education can empower users to critically assess the content they consume. When such discussions are promoted, users are better equipped to navigate their online worlds positively.

Along with these issues, the promotion of extreme content can lead to increased aggression among users. Users exposed to inflammatory or divisive material are more likely to display hostile behaviors online. This can create an environment where bullying flourishes, affecting the mental health of victims. Emotional dysregulation, often linked with exposure to negative content, can drive users to act out in harmful ways. The resulting volatile interactions further exacerbate feelings of anxiety and depression in individuals targeted by aggressive posts. Both directly and indirectly, aggressive online environments can lead individuals to feel unsafe, pushing them away from their social networks. This struggle for emotional safety highlights the need for critical evaluation of content management practices on social media. Fostering kindness and empathy within online communities can have long-lasting positive effects, and implementing strategies that prioritize positivity can help mitigate the ill effects of extreme content. Users should advocate for improvements in content moderation that align with mental health standards, ensuring all voices feel heard. Together, we can build a safer space for constructive dialogue.

Recommendations for Users

Users can take proactive measures to mitigate the adverse effects of algorithmically promoted extreme content. Curating feeds to reflect diverse perspectives is a powerful strategy for improving mental health. Engaging with content that challenges one's beliefs promotes critical thinking and reduces the dangers of echo chambers. Users should evaluate their emotional reactions to the content they consume, asking whether it lifts them up or brings them down. Taking regular breaks from social media is another effective strategy to foster well-being; simply stepping away can help reduce anxiety. Seeking out platforms that prioritize mental health and well-being over engagement can improve the experience. Moreover, users can use tools that allow them to filter out negative content or strongly opinionated discussions. By focusing on authentic connections and fostering positive communities, users can help a healthier digital environment emerge. Taking part in discussions around media literacy opens avenues for reflection and growth. Users should also share their experiences of how extreme content has influenced their lives. Such sharing can break stigmas, promoting healthier interactions online while fostering a sense of community.

Lastly, advocacy for change within social media platforms is vital for addressing the mental health impacts of algorithmic promotion. Users can rally for more transparency about how algorithms work and how they affect users. Campaigning for greater accountability in content moderation practices is essential as society grapples with mental health challenges. Encouraging platforms to invest in user education on responsible usage can also help. This may include workshops, tutorials, and informative content that foster a better understanding of online interactions. Furthermore, supporting research initiatives focused on the impacts of social media can shape policies in favor of mental health. Users can also urge elected representatives to prioritize laws that regulate platform practices related to algorithmic content promotion. By standing united, users can push for essential changes that improve mental health outcomes. Building awareness about these issues empowers individuals to become advocates, effecting change in how platforms operate. Collectively, these actions can lead to healthier online experiences. Understanding the root of these problems enables users to navigate their digital lives more effectively, thus promoting mental well-being.

Conclusion

The algorithmic promotion of extreme content on social media poses significant challenges to mental health. Understanding how these dynamics shape experiences is crucial for users and platform developers alike. As users become more aware of their digital interactions, they can advocate for healthier practices, both personally and collectively. Creating an environment that fosters empathy, kindness, and diverse perspectives is essential. The role of algorithms must be re-evaluated to prioritize mental well-being over merely engaging content. By taking proactive steps, users may reclaim their mental health and reshape their online realities. This societal shift requires both collaboration and individual awareness, and it underscores the importance of responsible media consumption. Together, through awareness and action, we can combat the troubling impacts of extreme content and build a healthier social media landscape that supports mental health.